Are you considering automating your software delivery by building a CI/CD pipeline with Jenkins, Docker, and Kubernetes? Then you have landed in the right place.

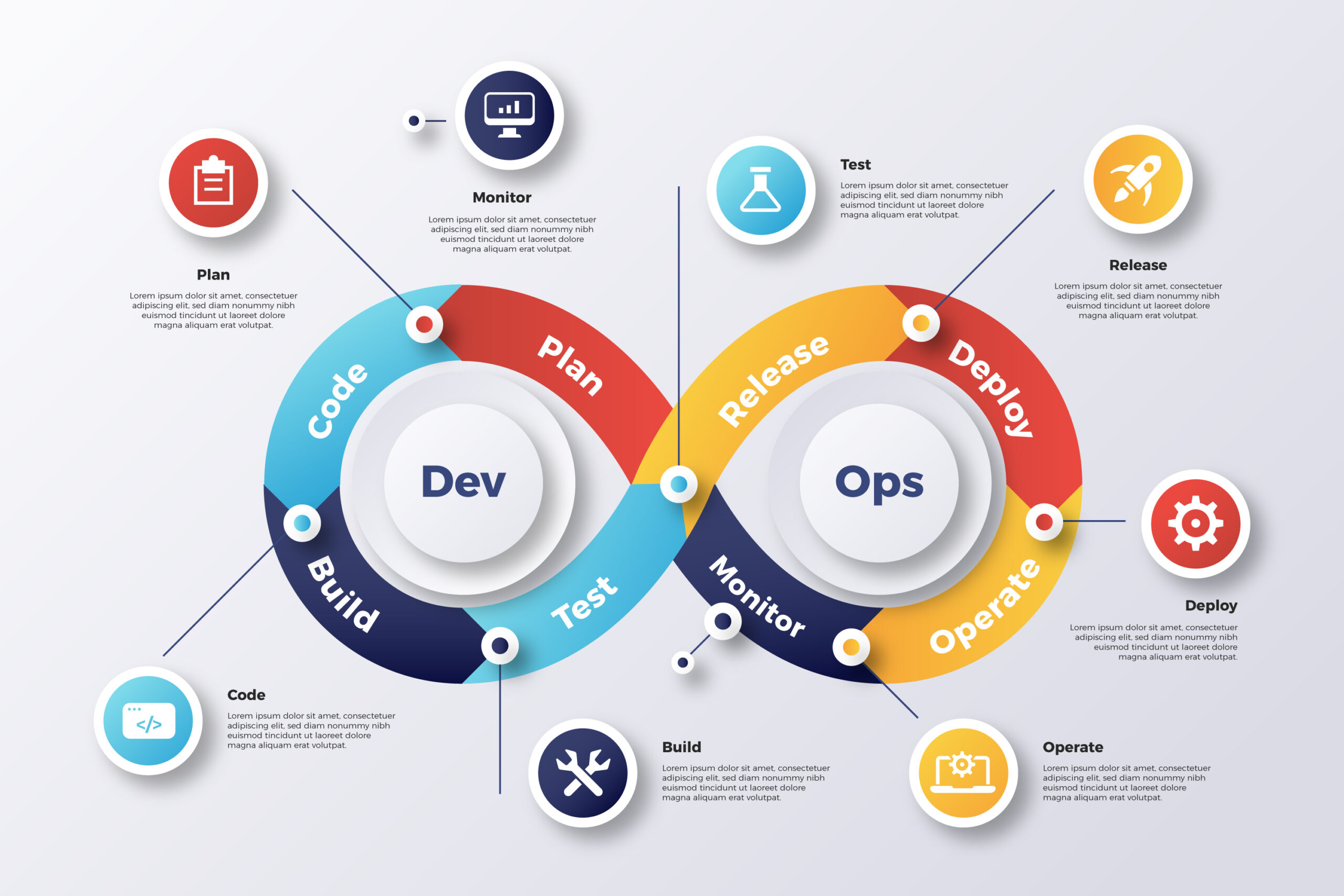

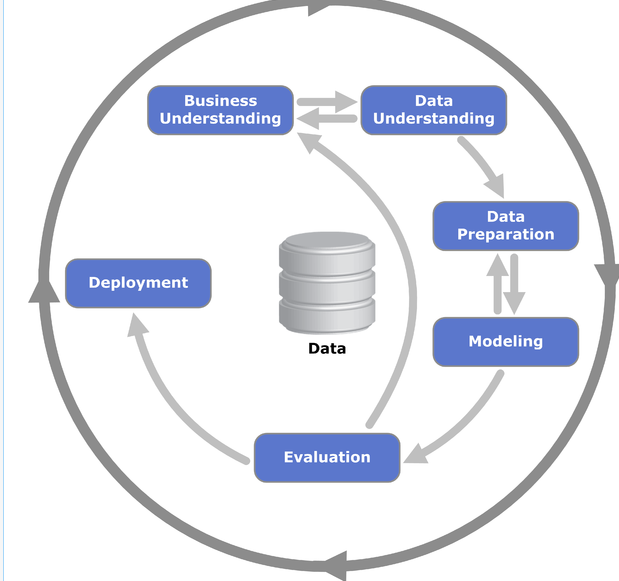

Automating the software delivery process is crucial in today’s fast-paced development landscape. This is where CI/CD Pipelines come in. CI/CD, or Continuous Integration and Continuous Delivery/Deployment, automates tasks like building, testing, and deploying your application, leading to faster releases and improved code quality.

This blog walks you through creating a CI/CD pipeline using a powerful trio: Jenkins, Docker, and Kubernetes.

Prerequisites:

- A running Jenkins server

- Basic understanding of Docker and Kubernetes concepts

- A Dockerfile for your application

- A Kubernetes deployment manifest file (e.g., deployment.yaml)

Dependencies:

Jenkins Plugins:

- Docker Pipeline Plugin

- Kubernetes Plugin

CI/CD with Jenkins, Docker and Kubernetes: A Step-by-Step Guide

1. Install Jenkins Plugins:

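- Log in to your Jenkins dashboard and navigate to “Manage Jenkins” -> “Manage Plugins” (labelled “Plugins” in recent Jenkins releases).

- Install the “Docker Pipeline” and “Kubernetes” plugins. The kubernetesDeploy step used in the example Jenkinsfile below is provided by the separate “Kubernetes Continuous Deploy” plugin, so install that as well if you use that step.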

2. Create a New Pipeline Job:

- Click on “New Item” and select “Pipeline” as the job type. Name your pipeline appropriately.

3. Define Pipeline Script (Jenkinsfile):

- You can define the pipeline script directly in the Jenkins interface or use a separate Jenkinsfile stored in your version control system. Here’s an example Jenkinsfile using a shared library for cleaner code organization:

Groovy

library 'shared-lib' // Name of a shared library configured in Jenkins
pipeline {
    agent any
    environment {
        DOCKER_REGISTRY = 'your_docker_registry' // Replace with your registry URL
        IMAGE_NAME = 'your_image_name' // Replace with your image name
        // Username/password credential stored in Jenkins (replace the ID with your own).
        // Jenkins exposes it as REGISTRY_CREDS_USR and REGISTRY_CREDS_PSW.
        REGISTRY_CREDS = credentials('your_registry_credentials_id')
    }
    stages {
        stage('Checkout Code') {
            steps {
                git branch: 'master', credentialsId: 'your_git_credentials_id', url: 'https://github.com/your-repo.git'
            }
        }
        stage('Build Docker Image') {
            steps {
                // Build your application using your build command (assumes Maven is available on the agent)
                sh 'mvn package'
                // Log in without exposing the password on the command line
                sh 'echo "$REGISTRY_CREDS_PSW" | docker login "$DOCKER_REGISTRY" -u "$REGISTRY_CREDS_USR" --password-stdin'
                // Build and tag the image with the Jenkins build number, then push it
                sh 'docker build -t "$DOCKER_REGISTRY/$IMAGE_NAME:$BUILD_NUMBER" .'
                sh 'docker push "$DOCKER_REGISTRY/$IMAGE_NAME:$BUILD_NUMBER"'
            }
        }
        stage('Deploy to Kubernetes') {
            steps {
                // kubernetesDeploy comes from the Kubernetes Continuous Deploy plugin.
                // 'kubernetes-config' is the ID of the kubeconfig credential stored in Jenkins.
                kubernetesDeploy(kubeconfigId: 'kubernetes-config', configs: 'deployment.yaml', enableConfigSubstitution: true)
            }
        }
    }
}

Explanation:

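The library 'shared-lib' line loads a Jenkins shared library, a separate repository of reusable pipeline code that must be configured in Jenkins under that name.

The environment block stores Docker registry details (replace with your values). Registry credentials should come from the Jenkins credentials store rather than being written into the Jenkinsfile.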

The pipeline is broken down into stages:

- Checkout Code: Retrieves code from your version control system.

- Build Docker Image: Builds a Docker image using the provided Dockerfile and pushes it to your registry.

- Deploy to Kubernetes: Deploys the image to your Kubernetes cluster using the kubernetesDeploy step, a deployment manifest file, and the cluster credentials stored in Jenkins.

4. Configure Kubernetes Credentials:

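- Go to “Credentials” under “Manage Jenkins”.

- Create a new credential to store your Kubernetes cluster configuration details (e.g., server address, CA certificate). The right credential type depends on how you deploy: the kubernetesDeploy step expects the “Kubernetes configuration (kubeconfig)” kind added by its plugin, while kubectl-based deployments commonly use a kubeconfig uploaded as a “Secret file”. “Secret text” suits single values such as a service-account token.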
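As a minimal sketch of how a stored cluster credential might be consumed, the stage below binds a kubeconfig to the `KUBECONFIG` environment variable and deploys with kubectl. It assumes the kubeconfig was uploaded as a “Secret file” credential, that the Credentials Binding plugin and kubectl are available on the agent, and that `deployment.yaml` sits in the workspace root. The credential ID `kubeconfig-file` is a hypothetical placeholder.

```groovy
pipeline {
    agent any
    stages {
        stage('Deploy to Kubernetes') {
            steps {
                // 'kubeconfig-file' is a hypothetical credential ID; use your own.
                withCredentials([file(credentialsId: 'kubeconfig-file', variable: 'KUBECONFIG')]) {
                    // kubectl reads the server address and certificates from $KUBECONFIG.
                    sh 'kubectl apply -f deployment.yaml --namespace your-namespace'
                }
            }
        }
    }
}
```

The temporary file behind `KUBECONFIG` exists only inside the `withCredentials` block, so the cluster configuration is not left behind in the workspace.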

5. Configure Kubernetes Deployment (Optional):

- If your deployment manifest file (deployment.yaml) isn’t stored in your version control system, you can define it directly in the Jenkins job configuration under “Kubernetes Apply Configuration.”

6. Save and Run the Pipeline:

- Save your Jenkinsfile configuration.

- Click on “Build Now” to trigger the pipeline and observe the build process.

Important Notes:

- Secure your credentials using the Jenkins Credentials plugin for sensitive information like passwords.

- Consider adding additional stages like running unit tests before deployment.

- This is a basic example. You can customize the pipeline further based on your specific needs.
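A unit test stage of the kind mentioned above could look roughly like the following. This is a sketch that assumes a Maven project and the JUnit plugin; the test command and report path would differ for other build tools.

```groovy
pipeline {
    agent any
    stages {
        stage('Unit Tests') {
            steps {
                // Assumes a Maven project; swap in your own test command.
                sh 'mvn -B test'
            }
            post {
                always {
                    // Publish test results even when tests fail (requires the JUnit plugin).
                    junit 'target/surefire-reports/*.xml'
                }
            }
        }
    }
}
```

Placing this stage before the image build and deployment stages means a failing test stops the pipeline before anything is pushed or deployed.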

Code Snippet for Shared Library (shared-lib):

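Groovy

// vars/pipelineStage.groovy in the shared library repository (file name is illustrative)
def call(String name, Closure body) {
    // Delegate to the built-in stage step, then run the caller's steps
    stage(name) {
        body()
    }
}

Explanation:

This code defines a reusable custom step. In a shared library, the step takes its name from the file under vars/ (pipelineStage here) and its logic lives in a call method. Naming the function stage would make it call itself instead of the built-in step, and a steps block is only valid inside a declarative pipeline, so neither is used here. The step takes two arguments:

- name: The name of the pipeline stage (e.g., “Checkout Code”).

- body: A closure (block of code) that defines the steps to be executed within that stage.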

By using this function, you can create cleaner and more modular Jenkinsfiles, improving readability and maintainability.

Additional Considerations for CI/CD with Jenkins, Docker and Kubernetes

- Error Handling: Implement robust error handling mechanisms within your pipeline to gracefully handle failures and notify developers. Utilize features like catchError within your Jenkinsfile to define actions in case of errors during specific stages.

- Version Control Integration: Integrate your Jenkinsfile with a version control system like Git for tracking changes, rollbacks, and collaboration.

- Pipeline Triggers: Configure your pipeline to be triggered automatically upon code changes in your version control system. Jenkins offers options for triggering builds based on specific events (e.g., push to master branch).

- Multi-branch Pipelines: Consider using Jenkins’ multi-branch pipeline capabilities to manage pipelines for different branches or environments.

- Containerization of Builds: Explore containerizing your build environment using Docker for increased consistency and isolation across different build machines.
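Several of these considerations can be combined in one Jenkinsfile. The sketch below is illustrative only: the Maven image tag, the `./scripts/lint.sh` script, and the polling schedule are assumptions, the `when { branch ... }` condition is only meaningful in a multi-branch pipeline, and kubectl is assumed to be configured on the agent.

```groovy
pipeline {
    agent any
    triggers {
        // Poll the repository roughly every five minutes; a webhook is usually preferable.
        pollSCM('H/5 * * * *')
    }
    stages {
        stage('Build') {
            // Run the build inside a container (Docker Pipeline plugin); the image tag is an assumption.
            agent {
                docker {
                    image 'maven:3.9-eclipse-temurin-17'
                    reuseNode true
                }
            }
            steps {
                sh 'mvn -B package'
            }
        }
        stage('Lint') {
            steps {
                // Mark the build UNSTABLE and continue if this optional check fails.
                catchError(buildResult: 'UNSTABLE', stageResult: 'FAILURE') {
                    sh './scripts/lint.sh' // hypothetical script
                }
            }
        }
        stage('Deploy') {
            // Deploy only from the master branch.
            when { branch 'master' }
            steps {
                sh 'kubectl apply -f deployment.yaml'
            }
        }
    }
    post {
        failure {
            // Replace with your notification step of choice (mail, chat, etc.).
            echo "Build ${env.BUILD_NUMBER} failed: ${env.BUILD_URL}"
        }
    }
}
```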

Conclusion

By leveraging Jenkins, Docker, and Kubernetes, you can establish a powerful and automated CI/CD pipeline for streamlined software delivery. This blog provides a step-by-step guide to get you started. Remember to tailor the pipeline to your specific needs and incorporate best practices for error handling, version control, and triggering mechanisms. With a well-designed CI/CD pipeline, you can achieve faster deployments, improved code quality, and a more efficient development workflow.

FAQs: CI/CD with Jenkins, Docker, and Kubernetes

This section addresses frequently asked questions regarding CI/CD pipelines using Jenkins, Docker, and Kubernetes:

1. What are the benefits of using this approach for a CI/CD pipeline?

- Automation: Automates tasks like building, testing, and deploying your application, saving development time and effort.

- Consistency: Ensures consistent build and deployment processes across environments.

- Scalability: The containerized approach with Docker and Kubernetes facilitates easy scaling of your deployments.

- Improved Code Quality: Integration with testing stages allows for early detection and resolution of bugs.

- Faster Releases: Enables faster and more frequent deployments through automation.

2. Are there any alternatives to using Docker and Kubernetes in this pipeline?

Yes. Tools such as Podman can build and run images in place of Docker, and Kubernetes itself can run on container runtimes such as CRI-O or containerd. Additionally, some cloud platforms offer managed container orchestration services that might be suitable depending on your specific environment.

3. How can I secure my CI/CD pipeline?

- Implement secure credential management within Jenkins using the Credentials plugin to store sensitive information like Docker registry passwords and Kubernetes cluster credentials.

- Regularly update Jenkins and plugins to address security vulnerabilities.

- Consider restricting access to specific functionalities within Jenkins using user roles and permissions.
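To illustrate the first point, the following sketch scopes registry credentials to the single stage that needs them. It assumes the Credentials Binding plugin and a username/password credential; the ID `registry-creds` and the image name are hypothetical placeholders.

```groovy
pipeline {
    agent any
    stages {
        stage('Push Image') {
            steps {
                withCredentials([usernamePassword(credentialsId: 'registry-creds',
                                                  usernameVariable: 'REGISTRY_USER',
                                                  passwordVariable: 'REGISTRY_PASS')]) {
                    // Single quotes matter: the shell expands the variables,
                    // so Groovy never interpolates the secret into the script text.
                    sh 'echo "$REGISTRY_PASS" | docker login your_docker_registry -u "$REGISTRY_USER" --password-stdin'
                    sh 'docker push your_docker_registry/your-image:$BUILD_NUMBER'
                }
            }
        }
    }
}
```

The variables are only defined inside the `withCredentials` block, and Jenkins masks their values in the console output.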

4. What are some best practices for managing CI/CD pipelines?

- Version control your Jenkinsfile: Store your pipeline scripts in version control for tracking changes and facilitating rollbacks.

- Modular design: Break down your pipeline into smaller, reusable stages for better maintainability.

- Monitor and log: Monitor your pipeline executions and capture logs for troubleshooting purposes.

- Test and iterate: Regularly test and refine your pipeline to ensure optimal performance.

5. This seems complex. Is this approach suitable for small teams?

The complexity depends on your specific needs. While Jenkins, Docker, and Kubernetes offer powerful functionalities, they can have a learning curve. For smaller teams, exploring managed CI/CD services offered by cloud platforms might be a simpler alternative, especially during the initial stages. However, the approach outlined in this blog provides a scalable and customizable solution that can grow with your development team.

6. What troubleshooting steps can I take if my pipeline fails?

- Consult Jenkins logs: The Jenkins job console output provides detailed logs for each stage of your pipeline. Analyze these logs to identify the point of failure and its potential cause.

- Utilize debugging tools: Leverage debugging tools specific to your build environment (e.g., Docker logs, Kubernetes pod logs) to gain deeper insights into the issue.

- Review your Jenkinsfile: Double-check your pipeline script (Jenkinsfile) for syntax errors or configuration mistakes.

- Test individual stages: Isolate and test individual pipeline stages to pinpoint where the failure originates.
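One way to make failures easier to diagnose is to have the pipeline collect cluster state itself. The sketch below assumes kubectl is already configured on the agent and uses the placeholder names `your-app` and `your-namespace`.

```groovy
pipeline {
    agent any
    stages {
        stage('Deploy to Kubernetes') {
            steps {
                sh 'kubectl apply -f deployment.yaml --namespace your-namespace'
                // Fail the stage if the rollout does not complete within two minutes.
                sh 'kubectl rollout status deployment/your-app --namespace your-namespace --timeout=120s'
            }
        }
    }
    post {
        failure {
            // Record pod state and recent logs in the console output.
            // '|| true' keeps a diagnostics error from masking the original failure.
            sh 'kubectl get pods --namespace your-namespace -o wide || true'
            sh 'kubectl logs deployment/your-app --namespace your-namespace --tail=100 || true'
        }
    }
}
```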

7. How can I monitor the performance of my CI/CD pipeline?

- Jenkins Pipeline Metrics: Jenkins records the duration and result of every build and stage, which lets you track execution time and success rates over time. Plugins can expose further performance indicators.

- External Monitoring Tools: Integrate external monitoring tools like Prometheus or Grafana to gain deeper insights into pipeline performance and resource utilization.

8. What are some advanced CI/CD practices I can explore?

- Blue Ocean: Utilize Jenkins’ Blue Ocean plugin for a more visual and interactive pipeline design experience.

- Infrastructure as Code (IaC): Integrate IaC tools like Terraform or Ansible within your pipeline to manage and provision infrastructure alongside your application deployments.

- Static Code Analysis: Introduce static code analysis tools during the pipeline to identify potential code issues early in the development process.

- Security Scanning: Integrate security scanning tools within your pipeline to identify and address security vulnerabilities before deployment.
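As a rough sketch of the last two practices, the stages below run a static analysis check and an image vulnerability scan. The tools are assumptions chosen for illustration: Checkstyle via Maven for static analysis and the Trivy CLI for scanning, both expected to be available on the agent. The image name is a placeholder and the image must already be built or pullable.

```groovy
pipeline {
    agent any
    environment {
        // Placeholder image reference; match it to the tag your build stage produces.
        IMAGE = "your_docker_registry/your-image:${env.BUILD_NUMBER}"
    }
    stages {
        stage('Static Analysis') {
            steps {
                // Fails the stage when Checkstyle reports violations.
                sh 'mvn -B checkstyle:check'
            }
        }
        stage('Security Scan') {
            steps {
                // A non-zero exit code fails the stage if HIGH or CRITICAL issues are found.
                sh 'trivy image --exit-code 1 --severity HIGH,CRITICAL "$IMAGE"'
            }
        }
    }
}
```

Running these stages before the deployment stage keeps images with known serious issues from reaching the cluster.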

9. How does this approach integrate with GitOps methodologies?

GitOps, a DevOps practice that leverages Git for managing infrastructure and configuration, aligns well with this CI/CD pipeline approach. Changes to your application code and infrastructure configurations are stored and versioned in Git. Your CI/CD pipeline can then be triggered by changes in these repositories, ensuring deployments reflect the latest code and infrastructure definitions.

10. Where can I find more resources to learn about CI/CD pipelines?

- Jenkins Documentation: The official Jenkins documentation offers comprehensive guides and tutorials for creating and managing CI/CD pipelines https://www.jenkins.io/doc/

- Docker Documentation: The Docker documentation provides in-depth information on building, managing, and deploying containerized applications https://docs.docker.com/engine/install/

- Kubernetes Documentation: The Kubernetes documentation offers detailed explanations of concepts, deployment strategies, and best practices https://kubernetes.io/docs/home/

- CI/CD Best Practices Articles: Numerous online resources by technology providers and industry experts provide valuable insights and best practices for designing and implementing effective CI/CD pipelines.
