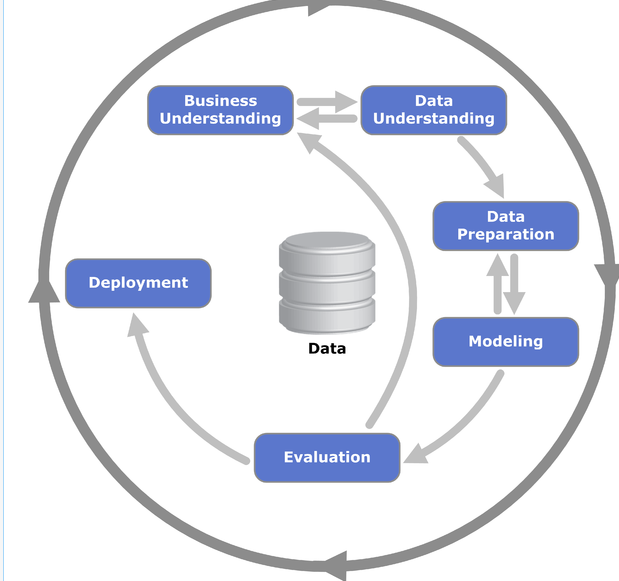

In the vast realm of machine learning, probabilistic approaches offer a powerful framework for modeling uncertainty and making informed decisions. Probabilistic Machine Learning (PML) combines principles from probability theory with machine learning techniques to provide flexible models capable of capturing uncertainty in data. In this comprehensive guide, we’ll delve into the theory behind probabilistic machine learning and explore practical implementations using Python code snippets.

Understanding Probabilistic Machine Learning

Probabilistic Machine Learning focuses on modeling uncertainty by assigning probabilities to different outcomes. Unlike traditional machine learning models that output a single deterministic prediction, probabilistic models provide a distribution over possible outcomes, allowing for richer, more nuanced predictions that come with a measure of confidence.

Key Concepts in Probabilistic Machine Learning

- Probabilistic Models: Probabilistic models, such as Bayesian networks, Gaussian processes, and Hidden Markov Models, form the foundation of probabilistic machine learning. These models capture uncertainty by representing probabilities over possible outcomes.

- Bayesian Inference: Bayesian inference is a fundamental concept in probabilistic machine learning that updates our beliefs about model parameters based on observed data. It provides a principled framework for incorporating prior knowledge and updating it with new evidence.

- Uncertainty Quantification: Probabilistic machine learning enables us to quantify uncertainty in predictions, which is crucial for making informed decisions in real-world applications. Uncertainty can arise from various sources, including limited data, model complexity, and measurement noise.
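Here is a minimal sketch that makes the prior-to-posterior update concrete. It uses a coin-flip example we chose for illustration, and the helper names are hypothetical rather than from any library. A Beta prior on the coin's probability of heads, combined with binomially distributed flips, yields a Beta posterior, so updating beliefs reduces to adding counts.

```python
# Conjugate Bayesian updating for a coin's probability of heads, theta.
# Prior Beta(a, b) + observed (heads, tails) -> posterior Beta(a + heads, b + tails).

def beta_binomial_update(a, b, heads, tails):
    """Return the posterior Beta parameters after observing coin flips."""
    return a + heads, b + tails

def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

def beta_variance(a, b):
    """Variance of a Beta(a, b) distribution."""
    return a * b / ((a + b) ** 2 * (a + b + 1))

# Prior belief: theta is probably near 0.5
a, b = 2.0, 2.0
print(f"prior:     mean={beta_mean(a, b):.3f}  var={beta_variance(a, b):.4f}")

# First batch of evidence: 7 heads, 3 tails
a, b = beta_binomial_update(a, b, heads=7, tails=3)
print(f"after 10:  mean={beta_mean(a, b):.3f}  var={beta_variance(a, b):.4f}")

# Second batch: the previous posterior now acts as the prior
a, b = beta_binomial_update(a, b, heads=60, tails=30)
print(f"after 100: mean={beta_mean(a, b):.3f}  var={beta_variance(a, b):.4f}")
```

Each batch of evidence pulls the posterior mean toward the observed frequency of heads and shrinks the posterior variance. This is uncertainty quantification in miniature.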

Practical Implementation with Python

Let’s dive into practical implementations of probabilistic machine learning using Python and popular libraries such as NumPy, TensorFlow Probability, and scikit-learn.

Bayesian Linear Regression

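```python
import numpy as np
import tensorflow as tf
import tensorflow_probability as tfp

tfd = tfp.distributions

# Generate synthetic data (float32 to match TFP's default dtype)
np.random.seed(42)
x = np.linspace(0, 10, 100).astype(np.float32)
y = (2 * x + np.random.normal(0, 1, 100)).astype(np.float32)

# Define Bayesian linear regression model as a joint distribution over (slope, intercept, y)
def bayesian_linear_regression(x):
    return tfd.JointDistributionSequential([
        tfd.Normal(loc=0., scale=1.),  # Prior for slope
        tfd.Normal(loc=0., scale=1.),  # Prior for intercept
        # Likelihood: earlier variables are passed to the lambda in reverse order
        lambda intercept, slope: tfd.Independent(
            tfd.Normal(loc=intercept[..., tf.newaxis] + slope[..., tf.newaxis] * x, scale=1.),
            reinterpreted_batch_ndims=1),
    ])

model = bayesian_linear_regression(x)

# Unnormalized log posterior: the joint log-density with y fixed to the observed data
def target_log_prob(slope, intercept):
    return model.log_prob([slope, intercept, y])

# Perform Bayesian inference with Hamiltonian Monte Carlo
hmc = tfp.mcmc.HamiltonianMonteCarlo(
    target_log_prob_fn=target_log_prob, step_size=0.01, num_leapfrog_steps=20)
slope_samples, intercept_samples = tfp.mcmc.sample_chain(
    num_results=1000,
    num_burnin_steps=500,
    current_state=[tf.zeros([]), tf.zeros([])],
    kernel=hmc,
    trace_fn=None)

print("posterior mean slope:", slope_samples.numpy().mean())
print("posterior mean intercept:", intercept_samples.numpy().mean())
```

In this example, we define a Bayesian linear regression model using TensorFlow Probability. It specifies Normal priors for the slope and intercept and a Normal likelihood with a fixed noise scale of 1. Sampling directly from this joint distribution would only draw from the prior. To perform Bayesian inference, we therefore fix `y` to the observed data inside `model.log_prob` and run Hamiltonian Monte Carlo, which returns samples from the posterior distribution of the slope and intercept.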
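In this model the priors and the likelihood are all Gaussian and the noise scale is fixed at 1, so the posterior over slope and intercept can also be computed exactly. The NumPy sketch below does this with the standard conjugate formulas:

- posterior precision = prior precision + ΦᵀΦ/σ²
- posterior mean = posterior covariance · Φᵀy/σ²

Here Φ is the design matrix `[x, 1]`. The helper name `gaussian_posterior` is hypothetical, and the data are regenerated so the block runs on its own.

```python
import numpy as np

# Same synthetic data as above: y = 2x + N(0, 1) noise
np.random.seed(42)
x = np.linspace(0, 10, 100)
y = 2 * x + np.random.normal(0, 1, 100)

def gaussian_posterior(x, y, prior_scale=1.0, noise_scale=1.0):
    """Exact posterior over (slope, intercept) for y ~ N(slope*x + intercept, noise_scale^2),
    assuming independent N(0, prior_scale^2) priors on both parameters."""
    phi = np.column_stack([x, np.ones_like(x)])              # design matrix columns: [x, 1]
    precision = np.eye(2) / prior_scale**2 + phi.T @ phi / noise_scale**2
    cov = np.linalg.inv(precision)                            # posterior covariance
    mean = cov @ phi.T @ y / noise_scale**2                   # posterior mean
    return mean, cov

mean, cov = gaussian_posterior(x, y)
print("posterior mean (slope, intercept):", mean)
print("posterior std  (slope, intercept):", np.sqrt(np.diag(cov)))
```

A correctly tuned sampler for the same model should produce posterior means and standard deviations close to these exact values.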

Gaussian Process Regression

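```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import RBF

# Generate synthetic data
np.random.seed(42)
X = np.linspace(0, 10, 100).reshape(-1, 1)
# ravel() keeps y one-dimensional; np.sin(X) alone has shape (100, 1) and would broadcast to (100, 100)
y = np.sin(X).ravel() + np.random.normal(0, 0.1, size=X.shape[0])

# Define Gaussian process regression model
kernel = 1.0 * RBF(length_scale=1.0)
# alpha is added to the kernel diagonal and acts as the noise variance (0.1 ** 2)
gp_model = GaussianProcessRegressor(kernel=kernel, alpha=0.1**2, n_restarts_optimizer=10)

# Fit the model to the data (kernel hyperparameters are tuned by maximizing the marginal likelihood)
gp_model.fit(X, y)

# Predictions with a standard deviation for each test point
x_test = np.linspace(0, 10, 100).reshape(-1, 1)
y_pred, y_std = gp_model.predict(x_test, return_std=True)

# Plot the mean prediction with an approximate 95% band for the underlying function
plt.scatter(X, y, s=10, label="data")
plt.plot(x_test, y_pred, label="GP mean")
plt.fill_between(x_test.ravel(), y_pred - 1.96 * y_std, y_pred + 1.96 * y_std,
                 alpha=0.3, label="95% interval")
plt.legend()
plt.show()
```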

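Here, we use scikit-learn to perform Gaussian Process Regression. We specify an RBF kernel scaled by a constant factor. Fitting the model tunes these kernel hyperparameters by maximizing the marginal likelihood, restarting the optimizer 10 times to avoid poor local optima. We then make predictions and obtain a standard deviation for each one, which serves as its uncertainty estimate.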
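To see roughly what the regressor computes, the sketch below implements the standard GP posterior predictive equations in NumPy for a zero-mean GP with an RBF kernel:

- mean = K*ᵀ(K + σ²I)⁻¹y
- variance = k(x*, x*) − K*ᵀ(K + σ²I)⁻¹K*

Unlike the scikit-learn model, it keeps the hyperparameters fixed. The values used (length scale 1, signal variance 1, noise variance 0.01) are assumptions, not fitted values, and `rbf_kernel` and `gp_predict` are hypothetical helper names.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential (RBF) kernel between 1-D input arrays a and b."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)

def gp_predict(x_train, y_train, x_test, noise_var=0.01, **kernel_args):
    """Posterior predictive mean and std of a zero-mean GP with fixed hyperparameters."""
    K = rbf_kernel(x_train, x_train, **kernel_args) + noise_var * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test, **kernel_args)      # train-test covariances
    K_ss = rbf_kernel(x_test, x_test, **kernel_args)      # test-test covariances
    L = np.linalg.cholesky(K)                            # Cholesky factor: K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))  # (K + noise)^-1 y
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.diag(K_ss) - np.sum(v**2, axis=0)
    return mean, np.sqrt(np.maximum(var, 0.0))           # clip tiny negative round-off

np.random.seed(42)
x_train = np.linspace(0, 10, 100)
y_train = np.sin(x_train) + np.random.normal(0, 0.1, size=x_train.shape[0])
x_test = np.linspace(0, 12, 50)  # extends past the data on purpose
mean, std = gp_predict(x_train, y_train, x_test)
```

Test points beyond x = 10 have a predictive standard deviation that grows back toward the prior level as they move away from the data.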

Applications of Probabilistic Machine Learning

- Uncertainty Estimation: Probabilistic machine learning is widely used in applications where understanding uncertainty is critical, such as finance, healthcare, and autonomous systems.

- Anomaly Detection: By modeling normal behavior probabilistically, machine learning models can detect anomalies or outliers in data, aiding in fraud detection, fault diagnosis, and cybersecurity.

- Bayesian Optimization: Probabilistic models are employed in Bayesian optimization to efficiently search for the best settings of functions that are expensive to evaluate, a technique commonly used in hyperparameter tuning and experimental design.
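The Bayesian optimization loop can be sketched with scikit-learn’s `GaussianProcessRegressor` as the probabilistic surrogate and expected improvement as the acquisition function. The objective, search range, initial design, and iteration count below are hypothetical toy choices, and practical tuning tools add many refinements.

```python
import numpy as np
from scipy.stats import norm
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern

def objective(x):
    """Hypothetical 'expensive' black-box function to minimize (a cheap toy stand-in)."""
    return np.sin(3 * x) + 0.1 * x**2

def expected_improvement(mu, sigma, best_y, xi=0.01):
    """Expected improvement over best_y for a minimization problem."""
    sigma = np.maximum(sigma, 1e-9)
    improvement = best_y - mu - xi
    z = improvement / sigma
    return improvement * norm.cdf(z) + sigma * norm.pdf(z)

rng = np.random.default_rng(0)
candidates = np.linspace(-3, 3, 500).reshape(-1, 1)  # search grid
X = rng.uniform(-3, 3, size=(3, 1))                  # initial random designs
y = objective(X).ravel()

gp = GaussianProcessRegressor(kernel=Matern(nu=2.5), alpha=1e-6, normalize_y=True)
for _ in range(15):
    gp.fit(X, y)                                     # update the surrogate with all results
    mu, sigma = gp.predict(candidates, return_std=True)
    ei = expected_improvement(mu, sigma, y.min())
    x_next = candidates[np.argmax(ei)].reshape(1, 1) # most promising point to evaluate next
    X = np.vstack([X, x_next])
    y = np.append(y, objective(x_next).ravel())

best = X[np.argmin(y)]
print("best x:", best, "best value:", y.min())
```

Expected improvement balances exploiting regions where the predicted mean is low against exploring regions where the model is still uncertain. This is how the probabilistic surrogate saves expensive evaluations.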

Real-World Applications of Probabilistic Machine Learning: Embracing Uncertainty for Better Results

Probabilistic machine learning (PML) isn’t just theoretical. It’s actively shaping various industries by accounting for the inherent uncertainty in real-world data. Here are some case studies from well-known companies that illustrate PML in action:

1. Spam Filtering with Bayesian Inference:

Most email providers use spam filters powered by machine learning. Traditional approaches rely on keywords and fixed patterns to identify spam emails, and spammers can evade such rules as their tactics evolve.

Example: Gmail (Google)

Gmail utilizes a multi-layered approach to spam filtering, with PML playing a crucial role. By employing Bayesian inference, Gmail assigns probabilities to emails being spam based on various factors like sender reputation (considering past interactions with known spammers), content analysis (identifying suspicious language or URLs), and user behavior (learning what emails users typically mark as spam). As new emails arrive, the model updates these probabilities (Bayesian update) to adapt to evolving spam strategies, leading to more accurate filtering over time.
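The Bayesian scoring idea can be illustrated with a toy multinomial naive Bayes filter, a classic way to apply Bayes’ rule to spam. This sketch is not a description of Gmail’s production system. The class name and training emails are hypothetical, and it uses only words as features, ignoring sender reputation and user behavior.

```python
import math
from collections import Counter

class NaiveBayesSpamFilter:
    """Toy multinomial naive Bayes spam filter (illustration only)."""

    def __init__(self):
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts = Counter()

    def update(self, text, label):
        """Incorporate one labeled email, e.g. after a user clicks 'report spam'."""
        self.word_counts[label].update(text.lower().split())
        self.doc_counts[label] += 1

    def spam_probability(self, text):
        """Posterior P(spam | words) using Laplace (add-one) smoothing."""
        words = text.lower().split()
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        log_post = {}
        for label in ("spam", "ham"):
            # Smoothed log prior, so a class with no emails yet does not give log(0)
            log_p = math.log((self.doc_counts[label] + 1) / (total_docs + 2))
            n_words = sum(self.word_counts[label].values())
            for w in words:
                # The extra +1 in the denominator reserves probability mass for unseen words
                log_p += math.log((self.word_counts[label][w] + 1) / (n_words + len(vocab) + 1))
            log_post[label] = log_p
        # Normalize in log space to avoid underflow
        m = max(log_post.values())
        spam = math.exp(log_post["spam"] - m)
        ham = math.exp(log_post["ham"] - m)
        return spam / (spam + ham)

f = NaiveBayesSpamFilter()
f.update("win a free prize now", "spam")
f.update("claim your free money", "spam")
f.update("meeting notes for tomorrow", "ham")
f.update("lunch tomorrow with the team", "ham")
print(f.spam_probability("free prize money"))
print(f.spam_probability("team meeting tomorrow"))
```

Because `update` only increments counts, every newly labeled email immediately changes later probabilities. That is the adaptive behavior the paragraph describes, in its simplest form.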

2. Anomaly Detection in Financial Transactions:

Financial institutions face the constant challenge of detecting fraudulent transactions. Traditional rule-based systems can miss novel fraud schemes.

Example: American Express

American Express leverages PML for fraud detection in their credit card transactions. Models are trained on historical data that includes legitimate purchases, fraudulent transactions, and user profiles. When a new transaction deviates significantly from the expected probability distribution (e.g., large unexpected purchase at a location far from the user’s usual spending habits), the model flags it for further investigation. This probabilistic approach helps catch fraudulent activities while reducing false positives, protecting both American Express and its cardholders.
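The idea of flagging transactions that fall in a low-probability region can be sketched with a single Gaussian fitted to a cardholder’s log purchase amounts. This is a deliberately simple model and not American Express’s method. The purchase history is randomly generated and hypothetical, and real systems combine many more features such as merchant, location, and time. The sketch sets the flagging threshold at the 99.5th percentile of historical anomaly scores, which is also an assumption.

```python
import numpy as np

def fit_spending_model(amounts):
    """Fit a Gaussian to log transaction amounts (a toy per-cardholder profile)."""
    log_amounts = np.log(amounts)
    return log_amounts.mean(), log_amounts.std(ddof=1)

def anomaly_score(amount, mu, sigma):
    """Negative log-density of log(amount) under the fitted Gaussian; higher = more unusual."""
    z = (np.log(amount) - mu) / sigma
    return 0.5 * z**2 + np.log(sigma * np.sqrt(2 * np.pi))

rng = np.random.default_rng(1)
history = rng.lognormal(mean=np.log(40), sigma=0.5, size=500)  # hypothetical past purchases
mu, sigma = fit_spending_model(history)

# Flag anything less likely than 99.5% of this cardholder's past transactions
threshold = np.quantile(anomaly_score(history, mu, sigma), 0.995)
for amount in (35.0, 60.0, 2500.0):
    score = anomaly_score(amount, mu, sigma)
    print(f"${amount:>8.2f}  score={score:.2f}  flagged={score > threshold}")
```

Raising or lowering the percentile trades missed fraud against false positives, which is the balance the paragraph above refers to.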

3. Weather Forecasting with Probabilistic Models:

Weather forecasting has traditionally provided point estimates of future conditions. However, weather is inherently uncertain, especially several days ahead.

Example: IBM (The Weather Company)

The Weather Company, acquired by IBM, utilizes advanced weather prediction models that incorporate PML. These models predict the likelihood of various weather events (rain, snow, sunshine) for a specific location and time, along with the associated confidence levels. This allows users to understand the range of possibilities with a probabilistic forecast (e.g., 70% chance of rain) and make informed decisions.

4. Self-Driving Cars with Uncertainty Quantification:

Self-driving cars rely on complex algorithms to navigate the roads. However, real-world scenarios can be unpredictable, involving pedestrians, cyclists, and unexpected obstacles.

Example: Waymo (Alphabet)

Waymo’s self-driving car technology heavily relies on PML. By combining sensor data from cameras, LiDAR, and radar with data from previous drives, its models can predict not just the most likely path but also the uncertainty associated with that prediction. This allows the car to anticipate hazards such as sudden lane changes by other vehicles or pedestrians hidden from view, quantify how likely such events are, and react accordingly.

5. Drug Discovery with Bayesian Optimization:

The process of drug discovery involves testing numerous potential drug candidates. Traditional approaches can be time-consuming and expensive.

Example: GlaxoSmithKline (GSK)

GSK is exploring the use of PML in Bayesian optimization for drug discovery. Models can predict the efficacy of potential drug candidates based on their chemical properties and past results from similar compounds. By iteratively testing promising candidates based on the model’s predictions and updating the model with the results (Bayesian update), researchers can prioritize the most promising leads. This can significantly accelerate the drug discovery process and potentially lead to life-saving breakthroughs.

Conclusion

Probabilistic Machine Learning offers a principled framework for modeling uncertainty and making informed decisions in machine learning applications. By combining theory with practical implementations in Python, we can leverage the power of probabilistic models to address complex real-world problems effectively. Embrace uncertainty, and unlock the full potential of machine learning with probabilistic approaches.